From Pilots to Practice: Why Most AI in Education Never Scales

The pilot paradox in education AI

Across higher education, generative AI pilots are everywhere. Institutions are experimenting with chatbots, content generators, tutoring tools, and assessment assistants. Yet very few initiatives move beyond proof of concept.

In our work across universities, ministries, and education systems globally, one pattern is consistent: most generative AI pilots in education never achieve scale. When they stall, it is rarely because the technology fails. It is because the conditions around it do.

This article explores why AI experimentation in education so often leads to dead ends, and what leaders must rethink to move from pilots to practice. The answer is not more tools. It is readiness, governance, and institutional design.

The real reason AI pilots stall: experimentation without intent

Most AI initiatives in education start with curiosity or urgency rather than strategy. A faculty member tests a tool. A department launches a pilot. An innovation unit runs a workshop or hackathon.

These efforts are valuable, but they often happen in the absence of, or disconnected from, any shared institutional vision for AI.

Without clarity on the following, pilots remain isolated experiments:

- What problem AI is meant to solve

- Where it should and should not be applied

- How success will be measured

- Who owns outcomes, data, and risk

Such pilots generate momentum, but they rarely accumulate into institutional change.

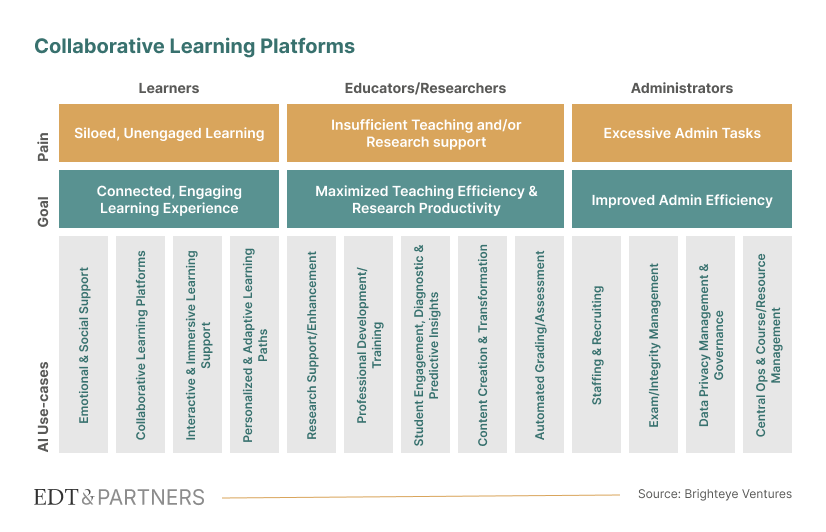

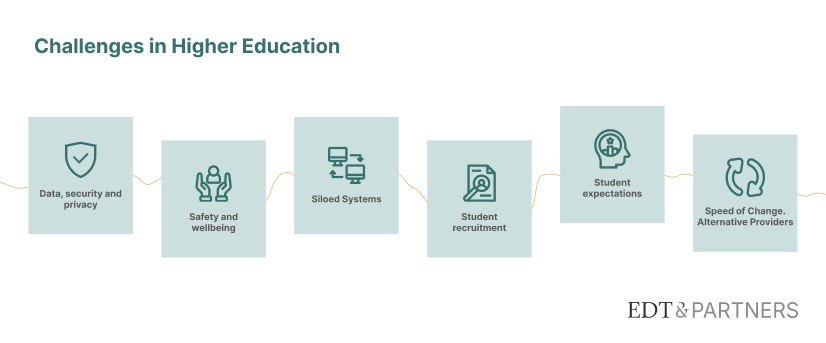

This lack of intent is amplified by broader pressures already facing higher education: privacy concerns, student wellbeing, siloed systems, and rising expectations around experience and recruitment.

AI adoption at scale requires more than experimentation. It requires intentional design.

AI disrupts education's institutional legitimacy

One of the most common mistakes institutions make is treating AI adoption in education as just another digital transformation, or as no different from AI adoption in other sectors.

Education is deeply human-centered and publicly accountable. Pedagogy matters. Academic values matter. Learners are not simply users.

Education is also different in a deeper way. It is one of the few sectors where AI disrupts the core mechanism of institutional legitimacy: the relationship between knowledge, effort, and certification.

In healthcare, AI raises urgent questions of safety, bias, and accountability. In education, generative AI challenges something more fundamental. It alters how learning is evidenced, how mastery is assessed, and what credentials are understood to represent. When systems can produce fluent outputs without understanding, the signals education depends on begin to break down.

Institutions are not simply implementing new tools. They are confronting a shift in the foundations of academic authority.

At the same time, education is being shaped by AI systems that evolve at extraordinary speed. Model updates arrive faster than institutions can assess, govern, or integrate them. Many AI providers now reach students and educators directly, often outside institutional channels. Adoption is happening at the edges of the institution before the institution can define the rules of legitimacy.

This creates a fundamental tension. Fast-moving AI meets slow, accountable systems, where authority depends on the ability to certify human learning.

Many pilots struggle because they are not designed for this reality. Scaling requires fit-for-purpose AI that aligns with pedagogy, governance, and institutional responsibility from the beginning.

Innovation within a fragile balance (and why governance matters)

AI innovation in education operates within a fragile balance. Institutions face pressure to experiment, but also an obligation to ensure systems can be trusted, understood, and governed over time.

Responsible scale requires clear accountability, visibility into how systems behave in practice, and alignment with emerging regulation. These foundations are not add-ons. They determine whether AI can move beyond pilots.

Many current use cases remain shallow. Chatbots and tutoring tools can support existing models, but they often scale convenience rather than learning impact. Without governance and observability, institutions risk adopting systems they cannot evaluate, steer, or defend.

AI holds real potential to support deeper learning, reflection, critique, and new forms of engagement. Realizing that potential depends on systems that remain legible to educators, adaptable to institutional policy, and grounded in learning science.

Education requires innovation and responsibility together. Without governance, pilots remain experiments. With governance, institutions can build toward meaningful scale.

From proof of concept to enterprise reality

Many pilots succeed in controlled environments. They demonstrate that something is possible.

Scaling introduces different questions.

Is the system sustainable? Is it secure? Who owns the data? How does it integrate into existing infrastructure? What happens when policies change or regulations tighten? Does the system align with evolving academic standards and credible signals of learning?

The gap between proof of concept and production often reveals unresolved issues:

- Data privacy and sovereignty

- Model governance and oversight

- Integration with institutional systems

- Long-term cost and operational ownership

- Alignment with academic standards and assessment

When these questions surface too late, pilots stall or are abandoned.

In education, AI implemented without governance rarely scales. It can become destabilizing before institutions have decided what learning and certification should mean in an AI-shaped world.

Why governance is the enabler, not the blocker

Governance is often cited as the reason AI adoption in education slows down. In practice, the opposite is true.

Clear governance builds trust and provides shared standards around acceptable use, data, accountability, and oversight. It allows institutions to experiment in ways that accumulate rather than reset.

Most importantly, governance helps institutions pause to redefine what credible learning evidence and mastery can mean in an AI-shaped environment, rather than leaving those meanings to erode by default.

Responsible AI is not about restricting progress. It is about creating the conditions under which progress becomes scalable.

Building institutional momentum beyond pilots

Moving from experimentation to real impact is rarely the result of a single breakthrough. It is a deliberate process.

Institutions that make progress clarify where AI can genuinely add value, build foundational governance early, and design pilots that connect to pedagogical goals and operational realities.

Over time, standards emerge, ownership becomes clearer, and capabilities integrate into everyday practice. What began as experimentation becomes embedded in how institutions teach, operate, and evolve.

This is not about moving faster. It is about building momentum in a way that is coherent, sustainable, and legitimate.

From hype to impact

The question is no longer whether AI will shape education. It already does.

The real question is whether institutions will remain stuck in cycles of experimentation or build the foundations for meaningful, responsible scale.

Moving from pilots to practice demands more than enthusiasm. It requires clarity of purpose, institutional readiness, and governance by design.

At EDT&Partners, we believe education needs AI that is not only safe and compliant, but also institutionally legible. The systems that scale will be those governed deeply enough to redefine what learning, mastery, and certification can mean in an AI-shaped world, before those meanings erode by default.