The EDiT Journal

A Solution Architect’s Guide: Multi-Account AWS Deployments [Part 2: App Deployment & Scaling]

Part 2 of a two-part guide for cloud architects, DevOps engineers, and technical leaders on automating multi-account AWS deployments with speed and security.

Cloud & Infrastructure

Tech Teams

In this article

Step 1: App Deployment

Step 2: Integration Testing

Step 3: Update Existing Customers

Step 4: Strengthen Security

Step 5: Optimize Costs

Step 6: Measure ROI and Business Impact

Step 7: Define a Future Roadmap

Key Takeaways

Conclusion

Welcome to the second installment of A Solution Architect’s Guide: Multi-Account AWS Deployments.

Part 1 outlined the foundational steps for moving from manual processes to scalable automation, including building with Terraform, bootstrapping new accounts, and modernizing with serverless-first architectures. This second part turns to a critical phase: application deployment, and how to make it consistent, secure, and scalable across multiple AWS environments.

If you have not read Part 1 yet, you can find it here: A Solution Architect’s Guide: Multi-Account AWS Deployments [Part 1: Foundations].

Modern application deployment rarely follows a one-size-fits-all model: a Lambda function, a Dockerized ECS service, and a CloudFront-distributed Node app each bring their own requirements. Without a unified strategy, this variety leads to inconsistent environments, manual fixes, and limited visibility into application health. Composite GitHub Actions address this by packaging reusable build and deployment steps that adapt to different targets, so builds are standardized, testing is enforced, and validation is automated. The result is fewer errors, stronger rollback strategies, and a DevOps culture where automation, consistency, and reliability are built in from the start.

In this guide, we’ll walk through a real-world composite action example, examine the common pitfalls to avoid, and highlight the solutions that streamline multi-target deployments. The outcome is a clear roadmap for transforming fragmented, manual processes into fast, secure, and repeatable CI/CD pipelines at scale. At EDT&Partners, we see reliable deployments and testing as more than technical steps; they are part of using technology purposefully to make solutions—across EdTech and beyond—scalable, reliable, and impactful.

Step 1: App Deployment

Composite Action example

Application deployment is where complexity often shows up first. Without standardization, build and release processes drift between workload types, manual fixes creep in, and risk grows. The aim is to unify deployments behind a single entry point that adapts to each target while keeping consistency, reliability, and automation at the core. In the composite action below, each step runs only when the `DEPLOY_TYPE` environment variable matches its target:

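```yaml
# action.yml: composite action that selects the build for the deployment target.
# DEPLOY_TYPE is expected to be set in the env of the job that calls this action.
name: "Build application"
description: "Builds a Lambda, ECS (Docker) or CloudFront (Node) application"
runs:
  using: "composite"
  steps:
    - name: Build Lambda
      if: env.DEPLOY_TYPE == 'LAMBDA'
      uses: EDT-Partners-Tech/action-build-lambda@v1.0.0

    - name: Build Docker (ECS)
      if: env.DEPLOY_TYPE == 'ECS'
      uses: EDT-Partners-Tech/action-build-docker@v1.0.0

    - name: Build Node (CloudFront)
      if: env.DEPLOY_TYPE == 'CLOUDFRONT'
      uses: EDT-Partners-Tech/action-build-node@v1.0.0
```

Common Problems You'll Face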

- Different deployment requirements per application type

- Inconsistent build and deployment processes

- Manual intervention is required for deployments

- No standardized testing procedures

- Lack of rollback mechanisms

- Complex multi-target deployment management

How to Solve Them

By integrating DevOps best practices:

- Unified deployment system supporting multiple targets

- Standardized build and deployment processes

- Automated testing and validation

- Non-disruptive deployment strategies

- Built-in rollback capabilities

- Environment-aware deployment configurations
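To show how such a composite action is consumed, here is a minimal sketch of a calling workflow. It assumes the action lives at the hypothetical path `.github/actions/build` in the same repository and that each stage (for example `dev` or `prod`) is a GitHub environment holding its own variables, secrets, and protection rules. The job sets `DEPLOY_TYPE`, so only the matching build step runs.

```yaml
# .github/workflows/deploy.yml (hypothetical file name)
name: Deploy application

on:
  workflow_dispatch:
    inputs:
      environment:
        description: "Target GitHub environment (e.g. dev, prod)"
        required: true
        type: string
      deploy_type:
        description: "Deployment target"
        required: true
        type: choice
        options: [LAMBDA, ECS, CLOUDFRONT]

jobs:
  deploy:
    runs-on: ubuntu-latest
    # Environment-aware configuration: vars, secrets and approval rules
    # come from the selected GitHub environment.
    environment: ${{ inputs.environment }}
    # Never run two deployments against the same environment at once.
    concurrency:
      group: deploy-${{ inputs.environment }}
      cancel-in-progress: false
    env:
      # Read by the `if:` conditions inside the composite action.
      DEPLOY_TYPE: ${{ inputs.deploy_type }}
    steps:
      # Local actions can only be used after the repository is checked out.
      - uses: actions/checkout@v4
      - name: Build for the selected target
        uses: ./.github/actions/build   # hypothetical path to the composite action
```

Because the target is just an input, adding a new workload type means adding one step to the composite action rather than a new pipeline.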

Step 2: Integration Testing

Once deployments are automated, the next step is to validate them. By integrating Cypress tests into your CI/CD pipeline, you can automatically check that applications work correctly in each environment after every deployment. This ensures consistency and reduces risk when handing environments over to customers.

How to Set It Up

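Use Cypress to run automated tests against the environment after every new deployment. The steps assume `cypress`, `mochawesome`, `mochawesome-merge`, and `mochawesome-report-generator` (which provides the `marge` command) are installed as development dependencies: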

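```yaml
- name: Run Cypress tests
  shell: bash
  env:
    # Pass the input through env instead of inlining it in the script (avoids script injection)
    TEST_NAME: ${{ inputs.TestName }}
  run: |
    # reportDir must match the folder the merge step reads from;
    # mochawesome's default is mochawesome-report/
    npx cypress run --spec "cypress/e2e/${TEST_NAME}.cy.js" \
      --project . \
      --reporter mochawesome \
      --reporter-options reportDir=cypress/reports,overwrite=true,html=true,json=true

- name: Merge Mochawesome JSON reports
  if: always() # Build the summary report even if tests fail
  shell: bash
  run: |
    npx mochawesome-merge cypress/reports/*.json > cypress/merged-report.json
    npx marge cypress/merged-report.json --reportDir=cypress/reports --reportFilename=summary-report
```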

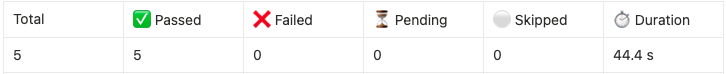

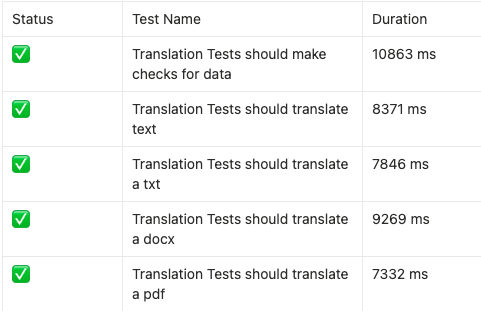

Example Cypress Test Report

Summary

Common Problems You'll Face

Before implementing automated integration tests, we lacked visibility and control over:

- Which version of the application was deployed in each customer account.

- Whether the deployed version was functioning as expected.

- Consistency across environments after changes.

This created risks during handover to customers, as we couldn’t guarantee that the last deployment was fully functional or tested.

How to Solve Them

By integrating Cypress tests into the CI/CD pipeline:

- We created a single source of truth for deployment validation.

- Every environment is tested with the same criteria, increasing confidence in each release.

- We ensured repeatable, automated post-deployment checks, allowing us to scale across multiple AWS accounts and customer environments safely.
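One way to apply the same criteria to every environment is to run the tests in a job that depends on the deployment and fans out over environments. A minimal sketch, assuming a `deploy` job in the same workflow, hypothetical environment names, and a hypothetical `APP_URL` variable defined on each GitHub environment. Cypress maps `CYPRESS_`-prefixed environment variables onto its configuration, so `CYPRESS_BASE_URL` sets `baseUrl`.

```yaml
jobs:
  # deploy: ...  (the deployment job, omitted here)

  integration-tests:
    needs: deploy                      # only test what was just deployed
    runs-on: ubuntu-latest
    strategy:
      fail-fast: false                 # report on every environment, not just the first failure
      matrix:
        environment: [dev, staging]    # hypothetical environment names
    environment: ${{ matrix.environment }}
    env:
      CYPRESS_BASE_URL: ${{ vars.APP_URL }}   # hypothetical per-environment variable
    steps:
      - uses: actions/checkout@v4
      - run: npm ci
      - name: Run Cypress tests
        run: |
          npx cypress run --reporter mochawesome \
            --reporter-options reportDir=cypress/reports,overwrite=false,html=false,json=true
      - name: Upload test reports
        if: always()                   # keep the evidence even when tests fail
        uses: actions/upload-artifact@v4
        with:
          name: cypress-report-${{ matrix.environment }}
          path: cypress/reports/
```

Each environment gets its own uniquely named artifact, which doubles as a record of what was verified before handover.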

Step 3: Update Existing Customers

You’ll also need a way to push updates to existing customer environments. The simplest approach is to trigger infrastructure or code changes through reusable GitHub Actions workflows, so every customer is updated through the same process.
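A reusable workflow is declared with the `workflow_call` trigger and receives its parameters as inputs. The sketch below is one possible shape, not our exact pipeline: the file name, the `customer` and `version` inputs, the `./scripts/deploy.sh` script, the `DEPLOY_ROLE_ARN` and `AWS_REGION` variables, and the `/app/deployed-version` parameter name are all hypothetical. Writing the deployed version to Parameter Store gives each customer account a record of what it is running.

```yaml
# .github/workflows/deploy-customer.yml (hypothetical)
name: Deploy to one customer environment

on:
  workflow_call:
    inputs:
      customer:
        description: "Customer environment name (also used as the GitHub environment)"
        required: true
        type: string
      version:
        description: "Git tag or commit to deploy"
        required: true
        type: string

jobs:
  deploy:
    runs-on: ubuntu-latest
    environment: ${{ inputs.customer }}
    permissions:
      id-token: write   # OIDC role assumption (the caller must grant this too)
      contents: read
    concurrency:
      group: update-${{ inputs.customer }}   # one update per customer at a time
      cancel-in-progress: false
    steps:
      - uses: actions/checkout@v4
        with:
          ref: ${{ inputs.version }}         # deploy exactly the requested version
      - uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: ${{ vars.DEPLOY_ROLE_ARN }}   # hypothetical per-environment variable
          aws-region: ${{ vars.AWS_REGION }}            # hypothetical per-environment variable
      - name: Deploy
        run: ./scripts/deploy.sh             # hypothetical deployment script
      - name: Record deployed version
        env:
          VERSION: ${{ inputs.version }}
        run: |
          aws ssm put-parameter --name /app/deployed-version \
            --value "$VERSION" --type String --overwrite
```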

Common Problems You'll Face

- No repeatable, reliable update process

- Lack of version tracking across customer environments

- Inconsistent update procedures

- Risk of failed updates

- No automated validation of updates

- Poor visibility into the update status

How to Solve Them

By integrating DevOps best practices:

- Automated update deployment through GitHub Actions

- Version tracking and change management

- Standardized update procedures

- Automated validation and testing of updates

- Clear update status monitoring

- Rollback capabilities for failed updates
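With a reusable deployment workflow in place, a release can be rolled out to every existing customer by calling it once per environment. A minimal sketch, assuming a reusable workflow at the hypothetical path `.github/workflows/deploy-customer.yml` that accepts `customer` and `version` inputs; the customer names are illustrative. Each matrix entry appears as its own job in the run summary, giving per-customer update status, and a rollback is the same run with the previous release tag as `version`.

```yaml
# .github/workflows/update-customers.yml (hypothetical)
name: Roll out a release to existing customers

on:
  workflow_dispatch:
    inputs:
      version:
        description: "Release tag to roll out (or the previous tag to roll back)"
        required: true
        type: string

permissions:
  id-token: write   # passed down to the called workflow for OIDC
  contents: read

jobs:
  update:
    strategy:
      fail-fast: false     # a failed customer does not cancel the others
      max-parallel: 2      # limit how many customers are updated at once
      matrix:
        customer: [customer-a, customer-b, customer-c]   # hypothetical names
    uses: ./.github/workflows/deploy-customer.yml        # hypothetical reusable workflow
    with:
      customer: ${{ matrix.customer }}
      version: ${{ inputs.version }}
    secrets: inherit
```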

Step 4: Strengthen Security

Security should be a core part of your architecture from the very beginning. Here are the key measures you’ll want to put in place when building a multi-account AWS setup:

Identity and Access Management

- IAM roles that follow the principle of least privilege

- Short-lived credentials using AWS STS

- Cross-account access through role assumption

- No long-term access keys in application code

- Instance profiles for runtime credentials

- An IAM OIDC identity provider to establish trust with GitHub Actions
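In a GitHub Actions pipeline, several of these points come together through OpenID Connect: the job exchanges a GitHub-issued token for temporary STS credentials, so no access keys are stored in the repository. A minimal sketch using the official `aws-actions/configure-aws-credentials` action; the `TARGET_ACCOUNT_ID` variable, the `github-deploy` role name, and the region are placeholders, and the role's trust policy is assumed to trust GitHub's OIDC provider (`token.actions.githubusercontent.com`) for this repository.

```yaml
permissions:
  id-token: write   # allow the job to request a GitHub OIDC token
  contents: read

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Assume the deployment role in the target account
        uses: aws-actions/configure-aws-credentials@v4
        with:
          # Hypothetical role in the customer account that trusts GitHub's OIDC provider
          role-to-assume: arn:aws:iam::${{ vars.TARGET_ACCOUNT_ID }}:role/github-deploy
          role-session-name: gha-${{ github.run_id }}
          role-duration-seconds: 900   # 15 minutes, the shortest session STS allows
          aws-region: eu-west-1        # placeholder region
      - name: Verify the assumed identity
        run: aws sts get-caller-identity
```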

Network Security

- VPC isolation for each customer environment

- Private subnets for sensitive resources

- Security groups with minimal required access

- Network ACLs for additional network security
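To keep security groups to the minimum required access, one group can reference another instead of a CIDR range. A minimal sketch expressed as CloudFormation YAML (the Terraform setup from Part 1 can declare the same rule); the VPC and load balancer security group IDs are assumed to be passed in as parameters, and the application tier accepts HTTPS only from the load balancer.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: App-tier security group reachable only from the load balancer (sketch)

Parameters:
  VpcId:
    Type: AWS::EC2::VPC::Id
  LoadBalancerSecurityGroupId:
    Type: AWS::EC2::SecurityGroup::Id

Resources:
  AppSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: App tier - HTTPS from the load balancer only
      VpcId: !Ref VpcId
      SecurityGroupIngress:
        - IpProtocol: tcp
          FromPort: 443
          ToPort: 443
          # Reference the load balancer's group rather than an IP range
          SourceSecurityGroupId: !Ref LoadBalancerSecurityGroupId
      # No egress rules are declared, so the default allow-all egress applies;
      # restrict it as well if your workloads allow it.
```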

Secrets Management

- AWS Secrets Manager for sensitive credentials

- Parameter Store for configuration

- Encryption at rest using AWS KMS

- Secrets rotation policies
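The split between the two services can be sketched in CloudFormation YAML: a generated database password lives in Secrets Manager, encrypted with a customer-managed KMS key, while non-sensitive configuration goes to Parameter Store. The key ARN parameter, secret name, and parameter value are placeholders. Rotation would additionally need an `AWS::SecretsManager::RotationSchedule` with a rotation function, which is omitted here.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Secret in Secrets Manager, plain config in Parameter Store (sketch)

Parameters:
  KmsKeyArn:
    Type: String
    Description: ARN of an existing customer-managed KMS key (assumed)

Resources:
  DatabaseSecret:
    Type: AWS::SecretsManager::Secret
    Properties:
      Name: !Sub "${AWS::StackName}/db-credentials"
      KmsKeyId: !Ref KmsKeyArn            # encryption at rest with the customer-managed key
      GenerateSecretString:               # the password is generated, never written by hand
        SecretStringTemplate: '{"username": "app"}'
        GenerateStringKey: password
        PasswordLength: 32
        ExcludeCharacters: '"@/\'

  ApiBaseUrlParameter:
    Type: AWS::SSM::Parameter
    Properties:
      Name: !Sub "/${AWS::StackName}/api-base-url"
      Type: String                        # CloudFormation cannot create SecureString parameters
      Value: https://api.example.com      # placeholder, non-sensitive configuration
```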

Compliance and Auditing

- AWS CloudTrail for API activity logging

- AWS Config for resource compliance

- VPC Flow Logs for network monitoring

- Amazon GuardDuty for threat detection
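Some of these controls take only a few lines of infrastructure code per account. A minimal sketch expressed as CloudFormation YAML that enables GuardDuty and VPC Flow Logs; the VPC ID and S3 bucket ARN are placeholders, and the bucket is assumed to exist already with a policy that allows log delivery. CloudTrail and AWS Config need more setup and are left out of this sketch.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Per-account threat detection and network logging (sketch)

Parameters:
  VpcId:
    Type: AWS::EC2::VPC::Id
  FlowLogBucketArn:
    Type: String
    Description: ARN of an existing S3 bucket for flow logs (assumed)

Resources:
  ThreatDetector:
    # Only one GuardDuty detector can exist per account and Region
    Type: AWS::GuardDuty::Detector
    Properties:
      Enable: true
      FindingPublishingFrequency: FIFTEEN_MINUTES

  VpcFlowLog:
    Type: AWS::EC2::FlowLog
    Properties:
      ResourceId: !Ref VpcId
      ResourceType: VPC
      TrafficType: ALL                 # capture both accepted and rejected traffic
      LogDestinationType: s3
      LogDestination: !Ref FlowLogBucketArn
```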

Step 5: Optimize Costs

Managing costs across multiple AWS accounts can quickly get out of control if left unchecked. To keep your architecture efficient and scalable, implement these AWS cost optimization best practices:

Infrastructure Optimization

- Auto-scaling based on demand

- Spot Instances for non-critical workloads

- Reserved Instances for baseline capacity

- Serverless components where appropriate
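As an example of demand-based scaling, an ECS service can follow a CPU target through Application Auto Scaling. A minimal sketch expressed as CloudFormation YAML; the cluster and service names are parameters, and the capacity limits and 60% target are illustrative values, not recommendations.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Target-tracking auto scaling for an ECS service (sketch)

Parameters:
  ClusterName:
    Type: String
  ServiceName:
    Type: String

Resources:
  ServiceScalableTarget:
    Type: AWS::ApplicationAutoScaling::ScalableTarget
    Properties:
      ServiceNamespace: ecs
      ScalableDimension: ecs:service:DesiredCount
      ResourceId: !Sub "service/${ClusterName}/${ServiceName}"
      MinCapacity: 1      # baseline capacity
      MaxCapacity: 6      # upper bound caps the cost of a traffic spike

  CpuTargetTracking:
    Type: AWS::ApplicationAutoScaling::ScalingPolicy
    Properties:
      PolicyName: cpu-target-tracking
      PolicyType: TargetTrackingScaling
      ScalingTargetId: !Ref ServiceScalableTarget
      TargetTrackingScalingPolicyConfiguration:
        TargetValue: 60   # keep average CPU around 60%
        PredefinedMetricSpecification:
          PredefinedMetricType: ECSServiceAverageCPUUtilization
        ScaleInCooldown: 120
        ScaleOutCooldown: 60
```

Target tracking adds tasks when average CPU rises above the target and removes them when it falls, so capacity follows demand within the min/max bounds.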

Resource Management

- Resource tagging for cost allocation

- Automated cleanup of unused resources

- Right-sizing instances and services

- Multi-AZ only where required

Cost Monitoring

- AWS Cost Explorer for trend analysis

- AWS Budgets for cost control

- CloudWatch alarms for unusual spending

- Regular cost reviews and optimization
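Budgets can be provisioned in each account alongside the rest of the infrastructure. A minimal sketch expressed as CloudFormation YAML of a monthly cost budget that emails an alert when actual spend passes 80% of the limit; the amount and e-mail address are placeholder parameters.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Monthly cost budget with an e-mail alert (sketch)

Parameters:
  MonthlyLimitUsd:
    Type: Number
    Default: 500                          # placeholder amount
  AlertEmail:
    Type: String                          # placeholder recipient

Resources:
  MonthlyCostBudget:
    Type: AWS::Budgets::Budget
    Properties:
      Budget:
        BudgetType: COST
        TimeUnit: MONTHLY
        BudgetLimit:
          Amount: !Ref MonthlyLimitUsd
          Unit: USD
      NotificationsWithSubscribers:
        - Notification:
            NotificationType: ACTUAL      # alert on actual spend, not the forecast
            ComparisonOperator: GREATER_THAN
            Threshold: 80                 # percent of the budget limit
            ThresholdType: PERCENTAGE
          Subscribers:
            - SubscriptionType: EMAIL
              Address: !Ref AlertEmail
```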

Step 6: Measure ROI and Business Impact

After implementing an automated multi-account strategy, you should expect to see measurable returns in both efficiency and cost savings.

Time Savings

- Deployment time: Reduced from 3 weeks to 30 minutes

- Engineering hours: 75% reduction in maintenance time

Cost Reduction

- Infrastructure costs: 40% reduction through automation

- Operational overhead: 95% decrease in manual tasks

- Resource utilization: 70% improvement

Step 7: Define a Future Roadmap

Automation doesn’t stop once you’ve built your initial multi-account strategy. To keep improving efficiency, cost control, and customer experience, consider adding enhancements like these:

Enhanced Deployment Strategies

- Canary Deployments: Gradually rolling out changes

- Blue/Green Deployments: Zero-downtime updates

- Feature Flags: Fine-grained feature control

- Automated Rollbacks: Instant recovery from issues
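For Lambda-based workloads, canary releases and automated rollbacks can be combined with AWS SAM, which configures CodeDeploy behind the scenes. A minimal sketch; the function, handler, runtime, and source path are placeholders. If the alarm enters the ALARM state during the deployment, CodeDeploy shifts traffic back to the previous version.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Transform: AWS::Serverless-2016-10-31
Description: Canary deployment with alarm-based rollback (sketch)

Resources:
  ApiFunction:
    Type: AWS::Serverless::Function
    Properties:
      Handler: index.handler        # placeholder handler
      Runtime: nodejs20.x
      CodeUri: ./src                # placeholder source path
      AutoPublishAlias: live        # publish a new version and route the alias through CodeDeploy
      DeploymentPreference:
        # Send 10% of traffic to the new version, wait 5 minutes, then shift the rest
        Type: Canary10Percent5Minutes
        Alarms:
          - !Ref ApiErrorsAlarm     # roll back automatically if this alarm fires

  ApiErrorsAlarm:
    Type: AWS::CloudWatch::Alarm
    Properties:
      AlarmDescription: Errors on the live alias of ApiFunction
      Namespace: AWS/Lambda
      MetricName: Errors
      Dimensions:
        - Name: Resource
          Value: !Sub "${ApiFunction}:live"
        - Name: FunctionName
          Value: !Ref ApiFunction
      Statistic: Sum
      Period: 60
      EvaluationPeriods: 1
      Threshold: 1
      ComparisonOperator: GreaterThanOrEqualToThreshold
      TreatMissingData: notBreaching
```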

Cost Management

- Predictive Cost Analysis: ML-based cost forecasting

- Per-Feature Cost Tracking: Granular cost attribution

- Automated Cost Optimization: Smart resource scaling

- Budget Controls: Automated cost governance

Administration and Monitoring

- Centralized Admin Dashboard: Single-pane-of-glass management

- Advanced Metrics: Enhanced observability

- Automated Reporting: Scheduled status updates

- Predictive Monitoring: Early issue detection

Customer Experience

- Self-Service Portal: Customer-managed deployments

- Release Notes Automation: Automated change tracking

- Custom Dashboards: Per-customer analytics

- Integration APIs: Extended platform capabilities

Key Takeaways

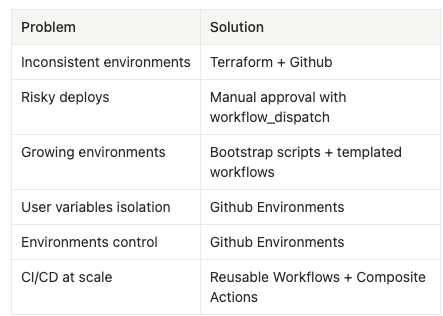

When moving from manual processes to automated multi-account deployments, a few recurring challenges tend to come up. The table below highlights the most common problems you’ll likely encounter along the way, along with the practical solutions that worked best in our setup.

Conclusion

This two-part series on multi-account AWS deployments has walked through the full journey: from laying the foundation with Terraform and modernizing infrastructure (Part 1), to standardizing application deployments, integrating testing, securing environments, and measuring ROI (Part 2).

Together, these steps form a repeatable blueprint for turning manual, error-prone processes into fast, secure, and scalable deployments across multiple AWS accounts. With the right combination of automation, infrastructure as code, and CI/CD best practices, multi-account deployments no longer have to be slow, risky, or inconsistent, and your teams and customers are set up for success from day one.

As organizations across industries—and especially in EdTech—face increasing demands for speed, reliability, and purposeful use of technology, approaches like this are no longer optional; they’re foundational.

If you’re tackling similar challenges or planning a multi-account strategy, we’d love to hear from you. Reach out to explore how to make it faster, safer, and fully automated.